How do you acquire, combine, manipulate, analyze and utilize all the data you need to create top-quality AI applications without infringing on people’s rights to privacy?

Until recently, this was predominantly an ethical question. Now, it’s a legal one.

Over the past decade, some of the biggest names in tech have been caught playing fast and loose with people’s most sensitive data, while a series of breaches caused international scandals. Privacy advocates throughout Europe and North America called for interventions that would protect individuals against re-identification, fraud, data theft and the broader erosion of their right to privacy. Regulatory bodies around the world responded by introducing stricter, more onerous rules.

As a result, organizations that deal in data have had their freedom to collect, store, move and sell user data severely curtailed. That includes limits on how long they can hold on to data provided by their customers and site visitors.

GDPR & CCPA: What Do They Mean for AI?

The two most important and far-reaching of these developments are the General Data Protection Regulation (GDPR), set by the European Union, and the California Consumer Privacy Act (CCPA). Both set out consumers’ rights over their personal data, aim to curb the commercial exploitation of Big Data, and limit how companies can disclose, or even use, personal data without the knowledge or consent of the people it relates to.

And these regulators are deadly serious about enforcement. Since GDPR came into force in 2018, hundreds of millions of euros in fines have been issued, including a whopping €27.8 million fine handed to the Italian telecoms provider TIM in 2020.

The new(ish) rules protect user rights and will, it is hoped, frustrate the attempts of hackers to get their hands on sensitive data. For the AI industry, though, they create a ton of headaches.

AI developers rely on swathes of data to create high-performance products. This data needs to be relevant, reliable and complete. It needs to provide plenty of context and nuance. It needs to combine historical data, sometimes stretching back years, with up-to-the-minute developments, in order to get a complete picture and make accurate predictions.

This leaves would-be AI adopters and innovators with three options. They can play it safe, limiting the amount of data they collect and leaving their valuable data assets to languish in secure, but untouched, silos. They can push the rules to breaking point, courting fines in the pursuit of technological progress. Or they can accept that using highly sensitive “real” data is too risky and, more to the point, unnecessary.

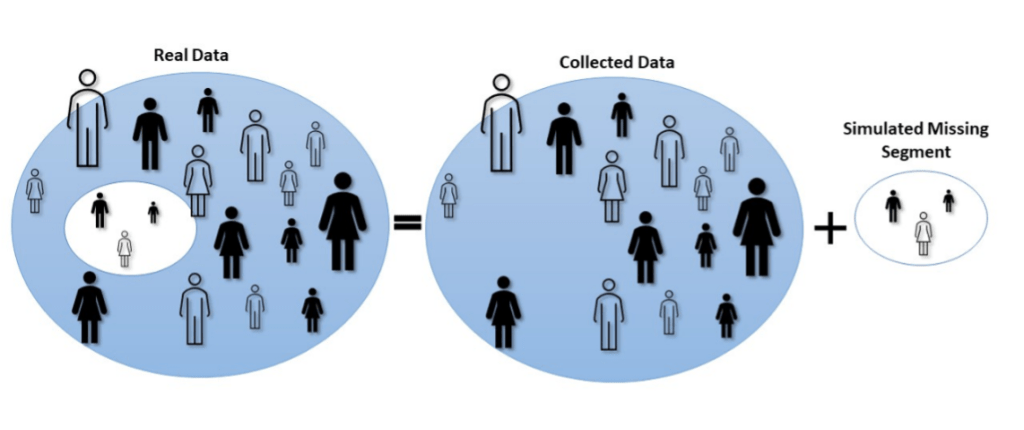

That’s because they can sidestep these issues at a stroke by using synthetic data instead. Synthetic data generation creates a brand-new, artificial dataset modeled on the underlying real-world production data. Statistically, it mirrors the original. The only difference is that the synthetic dataset doesn’t contain the records of any real individuals, so there’s no one’s privacy to undermine. In that sense, it’s risk-free.
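To make the idea concrete, here is a deliberately minimal sketch in Python of how a synthetic dataset can be derived from real records. It assumes purely numeric columns and models the data as a single multivariate Gaussian (fit the column means and covariance, then sample fresh rows); commercial synthetic-data tools typically use far richer generative models. The function names and the example records are hypothetical, chosen only for illustration.

```python
"""Minimal synthetic-data sketch (illustrative assumption: numeric columns,
multivariate Gaussian model). Fits summary statistics to real rows, then
samples brand-new rows from the fitted model."""
import math
import random
import statistics


def fit_gaussian(rows):
    """Estimate column means and the sample covariance matrix of numeric rows."""
    cols = list(zip(*rows))
    means = [statistics.fmean(c) for c in cols]
    n, k = len(rows), len(cols)
    cov = [[sum((r[i] - means[i]) * (r[j] - means[j]) for r in rows) / (n - 1)
            for j in range(k)] for i in range(k)]
    return means, cov


def cholesky(a):
    """Return lower-triangular L with L @ L^T == a (a must be positive definite)."""
    k = len(a)
    low = [[0.0] * k for _ in range(k)]
    for i in range(k):
        for j in range(i + 1):
            s = sum(low[i][m] * low[j][m] for m in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def synthesize(rows, n_samples, seed=0):
    """Draw n_samples new rows from a Gaussian fitted to the real rows.

    Only the fitted means and covariance are used, so no real row is copied.
    """
    means, cov = fit_gaussian(rows)
    low = cholesky(cov)
    rng = random.Random(seed)
    out = []
    for _ in range(n_samples):
        # Independent standard-normal draws, one per column.
        z = [rng.gauss(0.0, 1.0) for _ in means]
        # Correlate them to match the real covariance: x = mean + L z
        out.append([m + sum(low[i][j] * z[j] for j in range(i + 1))
                    for i, m in enumerate(means)])
    return out


if __name__ == "__main__":
    # Hypothetical "real" records: [age, annual income in thousands]
    real = [[float(a), 15 + 1.2 * a + ((a * 7) % 11 - 5)] for a in range(20, 66)]
    synthetic = synthesize(real, 1000)
    print("real means:     ", fit_gaussian(real)[0])
    print("synthetic means:", fit_gaussian(synthetic)[0])
```

The synthetic rows reproduce the averages and the age/income correlation of the real rows, yet none of them is a copy of a real record. A single Gaussian is chosen here only because it fits in a few lines with the standard library; it cannot capture categorical fields or non-linear relationships.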

While there are all kinds of privacy-enhancing technologies (PETs) to choose from, this is the only one that addresses the demands of privacy regulations in full without hindering AI development at all. Companies can store their synthetic data however, wherever and for as long as they like. They can share it as they see fit. They can monetize or even sell it. They won’t be breaking any privacy rules.

Privacy and progress needn’t be at odds with one another. The right technology can deliver unrivaled privacy protection while, at the same time, making AI processes better, faster and more cost-effective. That’s the future of privacy regulations and AI, if synthetic data is brought into the mix.
